This article repeats one we have just posted on LinkedIn.

So, now that the dust has settled a bit after Friday’s UK blackout ‘event’, what do we know and what have we learnt? I have put together a slightly longer post, which hopefully draws together some of the threads. At this stage the post inevitably contains a lot of speculation, so it should be taken with a pinch of salt.

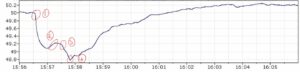

The story so far is that the Little Barford CCGT plant tripped at 4:56pm, taking with it around 720MW of generation. Then, the Hornsea Wind Farm tripped two minutes later at 5:00pm. National Grid are claiming that these two events were unrelated, and that it was the combination of the two that caused the ‘unprecedented situation’. National Grid then claimed that their system responded as planned, with the various under-frequency / Rate of Change of Frequency (ROCOF) relays around the UK kicking in to act as a load-shedding system. First, let’s have a look at the National Grid frequency graph:

At Point 1 we can see the first trip occurring, and the frequency rapidly falls off. At Point 2, the battery storage and other response systems start to kick in and the frequency recovers a little. At Point 3, Hornsea trips and the frequency drops again. At Point 4, the ROCOF relays start kicking in and load is shed, and the frequency begins to recover. Beyond this, the other jumps are where various generators start responding. An important caveat: these points are my interpretation of the graph rather than confirmed data.

Next, let’s have a look at the map of the sites, taken from the National Grid database. We can see that Little Barford is a good distance away from the Hornsea area, which lends a little credence to the idea that the two trips might be unrelated. Personally, I doubt it… in electrical terms, two independent trips within 2 minutes of each other would be a very odd coincidence, and it sounds more like one trip caused the other; the ‘why’ is hard to tell at this stage. The other thing to remember is that electrical disturbances ripple around the system very quickly, so a few hundred miles corresponds to only a few milliseconds. Quite why Hornsea tripped will need investigation, but it would be a fair guess that a transient response from Little Barford triggered a protection element on the Hornsea site.
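To put rough numbers on the distance point: if a disturbance travels along the line at close to the speed of light, a few hundred miles takes only a couple of milliseconds. The sketch below is a back-of-the-envelope estimate, assuming a propagation speed of 95% of the speed of light (an assumed velocity factor; real lines vary) and a hypothetical 300-mile path rather than the actual route between the two sites.

```python
# Back-of-the-envelope propagation delay for an electrical disturbance.
# Assumptions (illustrative only): the velocity factor and the path
# length are hypothetical, not measured values for the GB network.

SPEED_OF_LIGHT_M_PER_S = 299_792_458
METRES_PER_MILE = 1_609.344


def propagation_delay_ms(distance_miles: float, velocity_factor: float = 0.95) -> float:
    """Return the one-way travel time in milliseconds over a given path length."""
    distance_m = distance_miles * METRES_PER_MILE
    speed_m_per_s = velocity_factor * SPEED_OF_LIGHT_M_PER_S
    return distance_m / speed_m_per_s * 1_000


if __name__ == "__main__":
    delay = propagation_delay_ms(300)  # hypothetical 300-mile path
    print(f"Approximate delay over 300 miles: {delay:.2f} ms")
```

Over the hypothetical 300-mile path this works out at roughly 1.7ms, so geographic separation on its own does not rule out one trip influencing the other.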

Now that we have got that out of the way, is the National Grid response good enough? In my view, claiming an unprecedented situation is a bit unreasonable. The fundamental requirement of any electrical system (particularly at Grid level) is that it can withstand the loss of its largest generator without a major upset (the ‘N-1’ criterion). With a large, distributed network, is that approach even valid any more, or do National Grid need to consider multiple ‘N-2’ outage scenarios and sympathetic trips? So, it raises two serious questions:

- Why couldn’t the Grid withstand a staggered (2 minute) trip of 1.4GW?

- What would happen if one of the bigger sites, or interconnectors had tripped?
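The N-1 idea can be sketched as a simple adequacy check: does the fast reserve held by the operator cover the largest single loss (N-1), or the two largest losses together (N-2)? This is a deliberately crude model that ignores how quickly the reserve responds. The first infeed follows the 720MW figure above and the second is an assumed ~680MW so that the pair totals about 1.4GW; the other units and the reserve level are hypothetical.

```python
from typing import Dict


def largest_losses(infeeds_mw: Dict[str, float], n: int) -> float:
    """Sum of the n largest single infeeds (a crude N-n loss scenario)."""
    return sum(sorted(infeeds_mw.values(), reverse=True)[:n])


def reserve_covers(infeeds_mw: Dict[str, float], reserve_mw: float, n: int) -> bool:
    """True if the held reserve covers simultaneous loss of the n largest infeeds."""
    return reserve_mw >= largest_losses(infeeds_mw, n)


if __name__ == "__main__":
    # Hypothetical infeeds in MW; only the first two loosely follow the article.
    infeeds = {
        "Little Barford": 720,
        "Hornsea": 680,   # assumed, so that the pair totals ~1.4GW
        "Unit C": 600,    # hypothetical
        "Unit D": 450,    # hypothetical
    }
    reserve = 1_000  # hypothetical fast reserve, MW
    print("N-1 covered:", reserve_covers(infeeds, reserve, 1))
    print("N-2 covered:", reserve_covers(infeeds, reserve, 2))
```

With these made-up numbers the system passes N-1 but fails N-2, which is essentially the gap the two questions above are asking about.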

Once the two generators had tripped, the remaining online generators, along with the various battery storage systems, tried to increase their output. They couldn’t produce enough power quickly enough, and the frequency kept falling. At this stage the various under-frequency and Rate of Change of Frequency (ROCOF) relays started kicking into action. For those who are unfamiliar with these, they detect a falling frequency (which is symptomatic of a shortfall in generation) and trip key supplies to initiate load shedding. Once the load is shed, the system can start to rebalance, and other generators can be brought back online.

This raises several further questions:

- Is bulk under-frequency load-shedding a sensible approach?

- Was there a lack of inertia in the system, and would more battery storage solve the issue?

- Is the G59/G99 approach to preventing mini-islanding really sensible?

- Why was there so much chaos?

- What will the future bring?
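For readers unfamiliar with under-frequency and ROCOF relays, their basic logic can be sketched in a few lines. This is a simplified model, not any manufacturer’s or DNO’s actual scheme: the 48.8Hz pickup, 0.5Hz/s ROCOF setting, 200ms definite-time delay and one-sample-per-cycle measurement are all assumed values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FrequencyRelay:
    """Simplified under-frequency / ROCOF relay with illustrative settings."""

    uf_threshold_hz: float = 48.8      # assumed under-frequency pickup
    rocof_threshold_hz_s: float = 0.5  # assumed ROCOF pickup (rate of fall)
    delay_s: float = 0.2               # assumed definite-time delay
    sample_period_s: float = 0.02      # one sample per 50 Hz cycle

    def trip_time(self, freq_hz: List[float]) -> Optional[float]:
        """Return the time (s) at which the relay trips, or None if it never does.

        Either element (low frequency or fast fall) must stay picked up for
        delay_s before the relay trips; dropping out resets the timer.
        """
        required = max(1, round(self.delay_s / self.sample_period_s))
        count = 0
        for i in range(1, len(freq_hz)):
            rocof = (freq_hz[i] - freq_hz[i - 1]) / self.sample_period_s
            low_freq = freq_hz[i] < self.uf_threshold_hz
            falling_fast = -rocof > self.rocof_threshold_hz_s
            if low_freq or falling_fast:
                count += 1
                if count >= required:
                    return i * self.sample_period_s
            else:
                count = 0
        return None


if __name__ == "__main__":
    relay = FrequencyRelay()
    # Hypothetical event: steady 50 Hz for 1 s, then a slow 0.2 Hz/s decline.
    samples = [50.0 if i * 0.02 <= 1.0 else 50.0 - 0.2 * (i * 0.02 - 1.0)
               for i in range(500)]
    print("Trip time (s):", relay.trip_time(samples))
```

In this hypothetical slow decline the ROCOF element never picks up, so the relay only trips a little after the frequency passes the under-frequency setting; a fast collapse would instead be caught by the ROCOF element almost immediately.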

One perhaps subtle point to note is that the magnitude and speed of the frequency collapse follow directly from the size of the imbalance between generation and demand. If a much smaller generator had tripped, the drop would have been smaller and slower; if a much larger generator had tripped, the drop would have been sharper.
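The relationship in question is the aggregated swing equation: immediately after a loss of ΔP, the initial rate of change of frequency is approximately df/dt = -ΔP·f0 / (2·Ek), where f0 is the nominal frequency (50Hz) and Ek is the kinetic energy stored in the synchronised rotating plant. The sketch below evaluates this for trip sizes like those quoted above. The 200,000MW·s inertia figure is a hypothetical round number, not a measured value for GB on the day, and the model ignores load damping and governor response.

```python
NOMINAL_FREQ_HZ = 50.0


def initial_rocof_hz_per_s(loss_mw: float, kinetic_energy_mws: float,
                           f0_hz: float = NOMINAL_FREQ_HZ) -> float:
    """Initial ROCOF from the aggregated swing equation (negative = falling).

    df/dt = -dP * f0 / (2 * Ek), ignoring load damping and governor action.
    loss_mw is the generation lost; kinetic_energy_mws is stored energy in MW*s.
    """
    return -loss_mw * f0_hz / (2.0 * kinetic_energy_mws)


if __name__ == "__main__":
    system_inertia_mws = 200_000  # hypothetical stored energy, MW*s
    for loss in (100, 720, 1_400):
        rocof = initial_rocof_hz_per_s(loss, system_inertia_mws)
        print(f"Loss of {loss:>5} MW -> initial ROCOF {rocof:.3f} Hz/s")
```

Doubling the imbalance doubles the initial rate of fall, and halving the stored energy has the same effect, which is why the inertia discussion later in this post matters.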

Load-shedding and Demand Side Response

Load-shedding is the last resort of any power system operator. In effect, the operator sacrifices part of the network in order to preserve the overall system. As a concept it is essential in a power system, but its implementation is very tricky. Back in the days before distributed generation, top-down load-shedding worked, in the sense that loads could be tripped in a controlled manner and only customers were affected, rather than generation plant (with very few exceptions).

The problem with ROCOF-based load-shedding on the modern grid is that, although it correctly tripped load, it would also have tripped a lot of the generation connected at 132kV, 33kV and 11kV. So, while the load-shedding relays did their job and protected the network, by tripping a lot of the smaller generation sites they probably made the outages faced by customers a lot bigger than they needed to be. As the UK heads toward more decentralised and renewable generation, this issue will become worse.
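The embedded-generation problem is easy to see with a little arithmetic. When a relay trips a feeder, the grid loses that feeder’s demand but also any generation connected to it, so the net relief is load minus embedded generation. The feeder names and figures below are hypothetical, and the fixed-order scheme is a crude stand-in for a real stage-based relay scheme.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Feeder:
    name: str
    load_mw: float          # customer demand on the feeder
    embedded_gen_mw: float  # generation connected on the same feeder

    @property
    def net_relief_mw(self) -> float:
        """Demand actually removed from the grid if this feeder is tripped."""
        return self.load_mw - self.embedded_gen_mw


def shed_until_covered(feeders: List[Feeder], shortfall_mw: float) -> List[str]:
    """Trip feeders in the given order until the net relief covers the shortfall.

    Returns the names of the feeders tripped (all of them if the shortfall
    is never covered).
    """
    tripped: List[str] = []
    relief = 0.0
    for feeder in feeders:
        if relief >= shortfall_mw:
            break
        tripped.append(feeder.name)
        relief += feeder.net_relief_mw
    return tripped


if __name__ == "__main__":
    feeders = [  # hypothetical feeders, MW
        Feeder("A", load_mw=100, embedded_gen_mw=0),
        Feeder("B", load_mw=100, embedded_gen_mw=80),
        Feeder("C", load_mw=100, embedded_gen_mw=120),
        Feeder("D", load_mw=100, embedded_gen_mw=10),
    ]
    tripped = shed_until_covered(feeders, shortfall_mw=150)
    lost = sum(f.load_mw for f in feeders if f.name in tripped)
    print("Tripped:", tripped, "customer demand lost (MW):", lost)
```

With these made-up numbers, tripping in a fixed order disconnects all four feeders (400MW of customers) to recover a 150MW shortfall, because two of them carried almost as much generation as demand, or more. Tripping in order of net relief (A then D) would disconnect only 200MW of customers.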

The other thing to remember is that load-shedding as an abstract term is fine for engineers to talk about… but it has a very real impact. Load-shedding means intentionally forcing a power cut; for a domestic user this is a nuisance, but for a large industrial site, factory, hospital or rail system it has very serious implications for cost and safety.

Demand Side Response (DSR) is actually pretty similar to load shedding, but the idea is that customers sign up for the ‘service’ and in return get financial compensation. If there is a shortfall in power, they are intentionally tripped off the system first and then reconnected later. This could be done at a small domestic scale with fridges/freezers, electric heaters, HVAC and EV charging stations (maybe one for the future), but it would be fraught with practical and political difficulties. Implementation at large industrial sites would be simpler, but these sites would need serious incentives to turn down their power at short notice, leaving staff idle and production halted.
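At heart, a DSR call can be sketched as a merit-order selection: call on the participants who are cheapest to turn down until the shortfall is covered, and leave everyone else connected. The participants, capacities and prices below are hypothetical, and a real scheme would also have to handle notice periods, contract terms and verification.

```python
from typing import List, Tuple

# (participant, available turn-down in MW, payment in £/MWh); all hypothetical
Offer = Tuple[str, float, float]


def dispatch_dsr(offers: List[Offer], shortfall_mw: float) -> List[Tuple[str, float]]:
    """Select DSR offers in price order until the shortfall is met.

    Returns (participant, MW called) pairs; the last participant may be
    called only partially.
    """
    called: List[Tuple[str, float]] = []
    remaining = shortfall_mw
    for name, capacity_mw, _price in sorted(offers, key=lambda offer: offer[2]):
        if remaining <= 0:
            break
        take = min(capacity_mw, remaining)
        called.append((name, take))
        remaining -= take
    return called


if __name__ == "__main__":
    offers = [
        ("Cold store", 40, 90.0),
        ("Steelworks", 150, 250.0),
        ("EV chargers", 30, 60.0),
        ("Office HVAC", 20, 120.0),
    ]
    print(dispatch_dsr(offers, shortfall_mw=100))
```

Here the expensive industrial site is only called for the last small slice, which illustrates why large users would need a meaningful incentive before signing up at all.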

There is always a place for load-shedding, but it should be managed effectively with a more intelligent system and active DSR, rather than simple ROCOF schemes. Unplanned consumer disconnection should always be an absolute last resort, and never triggered by loss of a main generator.

System Inertia and Battery Storage

One thing that many have commented on is the lack of inertia in the system and the role of battery storage in responding to the fault. These are related points, but not quite the same thing, so let’s try to unpack them a bit.

Firstly, battery storage is not the same as inertia. Inertia responds instantaneously, by its nature, because the machines are already rotating. Battery storage does not: it needs a trigger (remember our under-frequency relays from earlier), and once triggered a battery’s response time is around 100ms to 200ms. That might sound like splitting hairs, but it makes a lot of difference to system stability. In a very high inertia system the battery response time is probably fine, as the frequency won’t fall fast enough to be a problem… but in a low inertia system that delay can be the difference between the system staying stable and load being shed. In a system with very high renewable penetration and low inertia, that delay could be crucial.
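The effect of the trigger delay can be illustrated with a toy single-bus model: frequency falls according to the swing equation until it crosses a trigger threshold, and the battery injects power only after a fixed delay. The 1,000MW loss, 49.8Hz trigger, 150ms delay (within the 100ms to 200ms range above), battery size and the two inertia values are all hypothetical, and the model ignores load damping, generator governors and battery ramp rates.

```python
def frequency_nadir(kinetic_energy_mws: float,
                    loss_mw: float = 1_000.0,
                    battery_mw: float = 1_000.0,
                    trigger_hz: float = 49.8,
                    delay_s: float = 0.15,
                    f0_hz: float = 50.0,
                    dt_s: float = 0.001,
                    duration_s: float = 5.0) -> float:
    """Return the lowest frequency reached in a toy single-bus simulation.

    Euler integration of df/dt = (P_batt - loss) * f0 / (2 * Ek).
    The battery is triggered when f first drops below trigger_hz and
    delivers full output delay_s later (a step, no ramp). By default it
    exactly offsets the loss, so frequency stops falling once it is online.
    """
    f = f0_hz
    nadir = f
    trigger_time = None
    t = 0.0
    for _ in range(int(duration_s / dt_s)):
        if trigger_time is None and f < trigger_hz:
            trigger_time = t
        battery_on = trigger_time is not None and t >= trigger_time + delay_s
        p_batt = battery_mw if battery_on else 0.0
        f += (p_batt - loss_mw) * f0_hz / (2.0 * kinetic_energy_mws) * dt_s
        nadir = min(nadir, f)
        t += dt_s
    return nadir


if __name__ == "__main__":
    for label, ek in (("High inertia", 200_000.0), ("Low inertia", 50_000.0)):
        print(f"{label}: nadir {frequency_nadir(ek):.3f} Hz")
```

Because the low-inertia case falls four times faster, it drops about four times further below the trigger point during the same 150ms delay before the battery arrives; the delay is identical, but its consequence is not.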

For the event on Friday, the battery storage systems performed exactly as planned; there just weren’t enough of them. Battery storage systems also faced the same problem as the embedded generators on the 132kV, 33kV and 11kV networks: ones that were working may have been knocked off by the load-shedding relays.

One interesting takeaway from this is that a system based on a number of very large generators is actually more vulnerable, because when a big generator trips the impact is more severe. By using lots of smaller generators (efficiency issues aside), a system operator spreads their risk, as the loss of any one generator is less likely to upset the system. The problem is that large offshore wind farms are now often among the largest generators on the system, and by their very nature will always be slightly more vulnerable than an onshore site.

As an aside, one of the key areas where the difference between batteries and rotating inertia really matters is the system response to bolted 3-phase faults, and the ability of the system to ride through while the fault is cleared. This is where inertia really matters, and batteries cannot (currently) respond quickly enough.

G59 / G99 Anti-islanding Schemes

One of the fundamental design aspects of the modern UK grid is that localised generators on the 132kV network and below must disconnect from the grid in the event of a major system disturbance. This is based on the principle that the DNOs and National Grid don’t want mini generation islands forming. Islanding would effectively split the UK from one big system controlled by National Grid into a mix of systems operated by National Grid and the DNOs. The trade-off here is between keeping customers connected and the logistical and control nightmare of running multiple power islands, and then re-synchronising them once the disturbance has stabilised.

Don’t get me wrong, I am not particularly advocating this as a solution, but there could well be room for some serious policy exploration here. If the UK were to step away from a rigid adherence to 400/275kV AC transmission and start looking at HVDC systems, this could potentially improve overall stability. Regions connected by HVDC links would be free to interchange power with each other, but could remain functionally separate if they needed to. This is similar to what happens in larger countries like the USA, China and Canada.

For industrial users and private networks that already have embedded generation, there is a tendency to automatically follow the G59/G99 loss-of-mains approach and shut down, but actually most sites could be made to run as an island with a little more design effort. This would allow them to keep operating while the system disturbance played out.
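Part of that extra design effort is deciding which loads a site keeps when it islands. One simple approach is a priority list: on loss of mains, keep loads in order of criticality for as long as the on-site generation can carry them, and shed the rest. The load names, priorities and generator rating below are hypothetical, and a real islanding scheme would also need frequency and voltage control, revised protection, and agreement with the DNO.

```python
from typing import List, Tuple

# (load name, priority where 1 = most critical, demand in kW); hypothetical
Load = Tuple[str, int, float]


def plan_island(loads: List[Load], onsite_gen_kw: float) -> Tuple[List[str], List[str]]:
    """Split loads into (kept, shed) so the kept demand fits the on-site generation.

    Loads are considered from most to least critical; any load that would
    overload the generation is shed, but a smaller lower-priority load may
    still be kept if it fits in the remaining headroom.
    """
    kept: List[str] = []
    shed: List[str] = []
    used_kw = 0.0
    for name, _priority, demand_kw in sorted(loads, key=lambda load: load[1]):
        if used_kw + demand_kw <= onsite_gen_kw:
            kept.append(name)
            used_kw += demand_kw
        else:
            shed.append(name)
    return kept, shed


if __name__ == "__main__":
    site_loads = [
        ("Process line", 2, 600.0),
        ("Control room", 1, 50.0),
        ("Canteen", 4, 80.0),
        ("HVAC", 3, 300.0),
        ("Lighting", 2, 100.0),
    ]
    kept, shed = plan_island(site_loads, onsite_gen_kw=800.0)
    print("Keep:", kept)
    print("Shed:", shed)
```

With an assumed 800kW of on-site generation, the control room, process line and lighting stay on while HVAC and the canteen are shed, so production carries on through the grid disturbance.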

Why was there so much Chaos?

Firstly, the chaos was caused by the load-shedding system itself: the under-frequency relays kicked into action and disconnected supplies. Outages on customer networks in the UK are rare, so many companies and institutions get a little complacent. In real terms this leads to a lack of investment, lack of maintenance, lack of regular testing and a host of other problems.

For our critical infrastructure (hospitals, rail, aviation, banks, IT, etc.) it should be a requirement that they have sufficient backup systems to continue operating on loss of power for a certain amount of time. Critical consumers normally have two lines of defence: the first is a UPS and the second is backup generation. A UPS should provide a bumpless (no-break) power transfer, keeping the protected system operating without interruption. Standby generators, meanwhile, will start and run (hopefully, if properly maintained!) within somewhere between 30s and 2 minutes.

In real terms, what this means is that, if designed correctly, critical systems should see no interruption to their continuity of service, and if designed really well, the people using the service shouldn’t even know there was a power cut. Less critical systems would experience a short power loss but would still be able to function in some capacity.
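Sizing the UPS to bridge the gap until the standby generator picks up is simple energy arithmetic: load multiplied by time. The sketch below uses the 30s to 2-minute start window mentioned above; the 200kW critical load and the 1.5 design margin (to allow for a slow first start attempt and battery ageing) are assumed values for illustration only.

```python
def ups_bridge_energy_kwh(critical_load_kw: float,
                          generator_start_s: float,
                          margin: float = 1.5) -> float:
    """Usable UPS energy (kWh) needed to carry a load until standby generation takes over.

    energy = load * time, scaled by an assumed design margin.
    """
    return critical_load_kw * generator_start_s / 3600.0 * margin


if __name__ == "__main__":
    load_kw = 200.0  # hypothetical critical load
    for start_s in (30, 120):
        energy = ups_bridge_energy_kwh(load_kw, start_s)
        print(f"Generator start {start_s:>3} s -> UPS energy {energy:.1f} kWh")
```

Even at the slow end of the range the stored energy needed is modest (10kWh in this example); in practice the harder part is making sure the generator really does start, which comes back to maintenance and testing.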

Backup and standby systems are a classic cost-risk decision. Companies ask: 1) What is the probability of a power cut? 2) What is the impact? 3) How much will it cost to mitigate that risk? The trap that many companies fall into is assuming the risk is negligible, because security of supply in the UK is pretty good while the cost of equipment is high. So there is a temptation to downplay the risk and worry too much about the cost. Perhaps converting standby generators to run as embedded generators might be a solution that critical infrastructure companies need to consider.
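Those three questions map onto a simple expected-loss comparison: probability (expressed as outages per year) multiplied by impact, set against the annualised cost of mitigation. The outage rates, impact cost and mitigation cost below are hypothetical; the point is how strongly the answer depends on the assumed probability.

```python
def expected_annual_loss(outages_per_year: float, cost_per_outage: float) -> float:
    """Probability (as a yearly rate) times impact: expected yearly cost of power cuts."""
    return outages_per_year * cost_per_outage


def mitigation_worthwhile(outages_per_year: float,
                          cost_per_outage: float,
                          annualised_mitigation_cost: float,
                          residual_fraction: float = 0.0) -> bool:
    """True if the avoided loss exceeds the yearly cost of the backup system.

    residual_fraction is the share of the impact that still occurs with the
    mitigation in place (0 = the backup fully prevents the loss).
    """
    avoided = expected_annual_loss(outages_per_year, cost_per_outage) * (1 - residual_fraction)
    return avoided > annualised_mitigation_cost


if __name__ == "__main__":
    impact = 500_000       # hypothetical £ cost of one outage
    backup_cost = 30_000   # hypothetical £ per year for UPS + generator
    for rate in (0.01, 0.1):
        print(f"{rate} outages/yr -> worthwhile: "
              f"{mitigation_worthwhile(rate, impact, backup_cost)}")
```

With these numbers, assuming one outage per century makes the backup look like a poor investment, while one per decade makes it clearly worthwhile; underestimating the probability is exactly the trap described above.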

The Future and Long Term Solutions?

So, let’s break out the crystal ball and see if we can guess what the future will bring in the short term and the long term. What is the solution? There is a saying that if you put two engineers in a room, you will get 5 different opinions. So, at this point, the only certainty is that there will be a big investigation into the event, ‘questions will be asked’ and ‘fingers will be pointed’!

As a consultant and engineer, it is very easy in these scenarios to sit back and criticise, but equally, systemic shortfalls in such a critical system cannot be ignored and must be addressed. Current UK and European policy (I haven’t mentioned Brexit once!) is all based on increasing renewables and decarbonising power generation. This means that large thermal power stations will continue to go offline and there will be an increasing penetration of low inertia, limited power response / spinning reserve systems that are not directly controlled by National Grid. The slow transition of DNOs to DSOs and the new G99 standard are both steps in the right direction, but there needs to be more joined-up thinking and interaction between National Grid and the DNOs.

There will be an inevitable policy review, but whether it actually results in the changes needed remains to be seen. My guesses for future policy? In the short term we will probably see a resurgence in the popularity of battery systems, and increased roll-out alongside renewable sites. There will probably be a shift in the Capacity Market, moving from half-hourly auctions to 5-minute auctions.

Some Short Term Operating Reserve (STOR) and similar sites might be moved from being kept offline and needing to respond within half an hour, to being kept on hot standby with a faster response time. Hopefully this will be linked to incentives for large power users to come under the STOR umbrella and provide Demand Side Reduction.

G99 and the Grid Code will probably get a shake-up, and hopefully some more intelligent and adaptive interactions between National Grid and the DNOs will be developed. Demand Side Response will hopefully move away from crudely implemented ROCOF relays towards dynamic control systems, so that embedded generators and storage units can actually help support the grid during such an event.

In the longer term, we are all expecting to see a huge increase in Electric Vehicles (EVs) and their associated charging stations. This will bring a surge in local demand and new usage patterns, which will initially be challenging for the DNOs. But it could also be a huge opportunity to add a large amount of battery storage to the grid in an intelligent way. EVs have the opportunity to provide bi-directional power flow, known as Vehicle to Grid (V2G). The other big change, which is less well known, is the new building requirement for new homes to be gas-free and provided with electric heating; this will add a significant load onto the electrical network.

V2G is a simple concept in which a vehicle connected to a charger is asked to provide power back into the grid. This would potentially allow a large fleet of cars and lorries to act as mini battery storage systems and significantly enhance overall security of supply. The only problem with this approach is that it could be particularly tricky to implement in a controlled and managed fashion, but perhaps a simple solution would be our favourite ROCOF relays.
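Rather than a simple on/off trip, a V2G charger could respond in proportion to how far the frequency has fallen, in the same spirit as a generator’s droop response. The sketch below is a hypothetical controller: the 49.8Hz deadband, 49.5Hz full-response point and 7kW per-vehicle export limit are assumed values, and it is not based on any particular V2G standard or charger interface.

```python
def v2g_export_kw(freq_hz: float,
                  max_export_kw: float = 7.0,
                  deadband_hz: float = 49.8,
                  full_response_hz: float = 49.5) -> float:
    """Power a single vehicle exports to the grid at a given frequency.

    Zero at or above the deadband, rising linearly to max_export_kw at
    full_response_hz and capped below it. All settings are assumptions.
    """
    if freq_hz >= deadband_hz:
        return 0.0
    fraction = (deadband_hz - freq_hz) / (deadband_hz - full_response_hz)
    return max_export_kw * min(fraction, 1.0)


def fleet_export_mw(freq_hz: float, vehicles: int, **settings: float) -> float:
    """Aggregate export of a fleet of identical vehicles, in MW."""
    return vehicles * v2g_export_kw(freq_hz, **settings) / 1_000.0


if __name__ == "__main__":
    for f in (50.0, 49.75, 49.65, 49.4):
        print(f"{f:.2f} Hz -> {fleet_export_mw(f, vehicles=100_000):.0f} MW")
```

With 100,000 hypothetical vehicles, a frequency of 49.65Hz would bring in roughly 350MW of support. A graded response like this avoids the cliff-edge of every vehicle switching at once, which is one of the control difficulties mentioned above.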

As renewable, low inertia generation becomes more prevalent, getting around the lack of system inertia and improving transient response will become one of the big hurdles for modern power systems. New CCGT build is possible, but it is an old technology that isn’t really cost-effective in the UK. Nuclear, well who knows; it could potentially be the best solution, and the technology is solid, but a cost-effective implementation appears to be beyond us in the UK. How will the problem of low inertia be solved? Perhaps through a mix of increased spinning/static reserve, more and faster-acting battery storage, synchronous condensers, flywheels and so on.
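To put a number on what a synchronous condenser or flywheel adds, the stored kinetic energy is E = ½Jω², and the inertia constant H is that energy divided by the machine’s MVA rating. The machine parameters below (20,000kg·m², 3,000rpm, i.e. a two-pole machine on a 50Hz system, rated 200MVA) are hypothetical round numbers, not data for any real unit.

```python
import math


def kinetic_energy_mws(inertia_kg_m2: float, speed_rpm: float) -> float:
    """Stored rotational energy E = 0.5 * J * omega^2, returned in MW*s (MJ)."""
    omega_rad_s = speed_rpm * 2.0 * math.pi / 60.0
    return 0.5 * inertia_kg_m2 * omega_rad_s ** 2 / 1e6


def inertia_constant_s(inertia_kg_m2: float, speed_rpm: float, rating_mva: float) -> float:
    """Inertia constant H (seconds) = stored energy / rated apparent power."""
    return kinetic_energy_mws(inertia_kg_m2, speed_rpm) / rating_mva


if __name__ == "__main__":
    j, rpm, mva = 20_000.0, 3_000.0, 200.0  # hypothetical machine
    print(f"Stored energy: {kinetic_energy_mws(j, rpm):.0f} MW*s")
    print(f"Inertia constant H: {inertia_constant_s(j, rpm, mva):.2f} s")
```

Each such machine adds its stored energy directly to the system total, slowing the initial rate of frequency fall for a given loss without needing any trigger or control action.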

There are other renewable technologies that are technically ready but commercially unattractive, such as the various tidal barrage systems. With a strong push of support from government, these schemes could become viable and act as flexible generation that can double as pumped storage, whilst adding much-needed rotating inertia to the system. Other renewable technologies, such as floating solar farms and wave power, are struggling to be deployed due to a lack of investment and long-term thinking. Wavehub, in Cornwall, was a brave and bold idea, but has sadly been under-used and subject to hostility.

Another long-term solution might be the steady roll-out of DC-based transmission systems, home DC networks and microgrids. These could make significant changes to overall power consumption and the control of electrical systems by allowing dynamic control of regional areas. Perhaps this is unfeasible for a small country like the UK, but it could be the long-term solution we need.

In summary, the UK blackout represented a major failure in UK policy, but let’s try to learn from it and use it to improve policy as we aim to decarbonise our electrical energy systems. The blackout could be used as a springboard for a host of changes to UK policy, which would enable the UK to push to the forefront of an energy-efficient and low-carbon future.